How to Write Tests That Actually Catch Bugs

Stop writing tests that look good but miss real bugs. Learn the proven strategies that help teams catch more production issues by testing what actually breaks, not just what should work.

Every developer has experienced the frustration of having comprehensive test coverage that still fails to catch critical bugs in production. You've tried unit tests, integration tests, end-to-end tests, and maybe even test-driven development. You've written hundreds of tests, achieved 90%+ coverage, and yet somehow, real users still encounter issues that your test suite never caught. This disconnect between test quantity and test quality is one of the most common challenges in software development.

This article identifies the key patterns that separate effective tests from those that merely check boxes. The truth is, most teams focus on testing the happy path and obvious edge cases, but real bugs often hide in the interactions between components, timing issues, and unexpected user behaviors.

Let me share the strategies and techniques that have helped teams write tests that actually catch real bugs before they reach production.

Why Most Tests Don't Catch Real Bugs

1. Testing Implementation, Not Behavior

The most common mistake is writing tests that verify how your code works, rather than what it should do. This creates brittle tests that break when you refactor, even when the behavior remains correct.

This is exactly why Test-Driven Development (TDD) and Behavior-Driven Development (BDD) are so powerful - they force you to think about behavior first, not implementation details.

Real-World Example: A team I worked with had extensive tests for their user authentication system. They tested every method call, database query, and validation rule. However, when a user tried to log in with a recently changed password, the login failed. The bug reached production because no test covered a password change that occurred during an active session.

The tests checked that the authentication method called the password validator, but they never exercised what the user actually experiences when a password changes during an active session.

2. Focusing on Happy Path Scenarios

Most test suites cover the expected user flows but miss the unexpected ones. Real bugs often occur when:

- Users perform actions in unexpected orders

- Network conditions are poor or intermittent

- Data is corrupted or malformed

- Multiple users interact with the same data simultaneously

- System resources are constrained

3. Ignoring Integration Points

Unit tests are essential, but they often miss bugs that occur at the boundaries between components. Real bugs frequently happen when:

- APIs return unexpected responses

- Database transactions fail partially

- External services are slow or unavailable

- Data formats change between systems

- Authentication tokens expire unexpectedly

How to Write Tests That Catch Real Bugs

Step 1: Start with Real Bug Reports

The best way to learn what to test is to analyze actual bugs that have occurred in your system.

Practical Approach:

- For each new bug report, write a test that would have caught it

- Categorize bugs by type (data corruption, timing issues, integration failures, etc.)

- Identify patterns in what was not tested

- Create test templates for common bug patterns you encounter

- Review recent production issues and ensure tests exist for similar scenarios

Why This Works: Every production bug represents a real scenario your tests missed. By writing tests for each bug you encounter, you're essentially teaching your test suite to recognize the patterns that lead to real failures.

Step 2: Test User Journeys, Not Just Functions

Instead of testing individual functions, test complete user journeys that represent real usage patterns.

Before (Function Testing):

test('validateEmail should return true for valid email', () => {
  expect(validateEmail('user@example.com')).toBe(true);
});

After (User Journey Testing):

test('user can complete registration with valid email', async () => {
  const user = await registerUser({
    email: 'user@example.com',
    password: 'securePassword123'
  });
  expect(user.email).toBe('user@example.com');
  expect(user.status).toBe('active');
  expect(user.verificationEmailSent).toBe(true);
});

Step 3: Implement Property-Based Testing

Property-based testing generates random inputs to test your code against properties that should always hold true.

Example: Instead of testing specific email formats, test that any valid email follows certain properties:

import * as fc from 'fast-check';

test('email validation properties', () => {
  fc.assert(
    fc.property(fc.emailAddress(), (email) => {
      const result = validateEmail(email);
      expect(result).toBe(true);

      // More robust email validation checks
      expect(email).toContain('@');
      const [localPart, domain] = email.split('@');
      expect(localPart.length).toBeGreaterThan(0);
      expect(domain.length).toBeGreaterThan(0);

      // Check for valid domain format (either has dot or is localhost)
      expect(domain.includes('.') || domain === 'localhost').toBe(true);
    })
  );
});

Step 4: Add Chaos Engineering to Your Tests

Introduce controlled failures to test how your system handles real-world problems.

Common Chaos Scenarios:

- Network latency: Simulate slow network connections

- Service failures: Mock external service outages

- Resource constraints: Limit memory or CPU during tests

- Data corruption: Introduce malformed data

- Concurrent access: Simulate multiple users accessing the same resources

Implementation Example:

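test('handles slow database connections gracefully', async () => {
  // Placeholder input; replace with whatever createUser expects in your codebase
  const userData = { email: 'user@example.com', password: 'securePassword123' };

  // Mock the database to respond only after 5 seconds.
  // The mock resolves with undefined; return a realistic row if createUser reads the result.
  vi.spyOn(database, 'query').mockImplementation(() =>
    new Promise(resolve => setTimeout(resolve, 5000))
  );

  const result = await userService.createUser(userData);
  expect(result.timeout).toBe(false);
  expect(result.user).toBeDefined();
}, 10000); // Per-test timeout raised above the simulated delay (Vitest defaults to 5 seconds)

Step 5: Test Boundary Conditions and Edge Cases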

Real bugs often occur at the boundaries of your system's capabilities.

Key Areas to Test:

- Data limits: Maximum file sizes, string lengths, array sizes

- Empty/null values: How your system handles missing data

- Special characters: Unicode, SQL injection attempts, XSS payloads

- Date/time edge cases: Leap years, timezone changes, DST transitions

- Numeric boundaries: Integer overflow, floating-point precision

Example:

test('handles large file uploads correctly', async () => {
  const largeFile = Buffer.alloc(100 * 1024 * 1024); // 100MB
  const result = await fileService.upload(largeFile);
  expect(result.success).toBe(true);
  expect(result.size).toBe(largeFile.length);
});

Step 6: Implement Concurrency Testing

Real bugs often occur when multiple operations happen simultaneously. Race conditions and parallel operations can cause data corruption, inconsistent states, and unexpected failures that tests running one operation at a time will rarely reproduce.

Common Concurrency Issues:

- Data races: Multiple threads accessing shared data simultaneously

- Deadlocks: Operations waiting for each other indefinitely

- Resource contention: Multiple processes competing for limited resources

Implementation Example:

test('handles concurrent user registrations correctly', async () => {
  const email = 'user@example.com';

  // Simulate multiple users trying to register with the same email
  const promises = Array.from({ length: 5 }, () =>
    userService.registerUser({ email, password: 'password123' })
  );
  const results = await Promise.allSettled(promises);

  // Only one registration should succeed
  const successfulRegistrations = results.filter(r => r.status === 'fulfilled');
  expect(successfulRegistrations).toHaveLength(1);

  // Others should fail with appropriate error
  const failedRegistrations = results.filter(r => r.status === 'rejected');
  failedRegistrations.forEach(result => {
    expect(result.reason.message).toContain('Email already exists');
  });
});

How to Check If Your Tests Are Catching Real Bugs

Implement Automated Regression Testing

Automated regression testing ensures that new changes do not break existing functionality.

Techniques:

- Snapshot testing: Capture and compare component outputs

- Visual regression testing: Detect UI changes automatically

- API contract testing: Verify API responses remain consistent

- Database migration testing: Ensure schema changes do not break existing queries

Implementation Examples:

Snapshot Testing:

test('user profile component renders correctly', () => {
  const user = { name: 'John Doe', email: 'john.doe@example.com' };
  const { container } = render(<UserProfile user={user} />);
  expect(container).toMatchSnapshot();
});

API Contract Testing:

test('user API maintains contract', async () => {
  // Note: fetch in Node.js requires an absolute URL; prefix your test server's base URL if needed
  const response = await fetch('/api/users/123');
  expect(response.ok).toBe(true);
  const user = await response.json();

  // Verify required fields exist
  expect(user).toHaveProperty('id');
  expect(user).toHaveProperty('name');
  expect(user).toHaveProperty('email');

  // Verify data types
  expect(typeof user.id).toBe('number');
  expect(typeof user.name).toBe('string');
  expect(typeof user.email).toBe('string');
});

Database Migration Testing:

When you modify your database schema (adding columns, changing data types, or restructuring tables), automated tests ensure that existing application code continues to work with the new structure.

test('existing queries work after schema changes', async () => {
  // Test that existing queries still work
  // Note: Adjust column names and data types based on your database schema
  const users = await db.query('SELECT * FROM users WHERE status = ?', ['active']);
  expect(users).toBeDefined();
  expect(Array.isArray(users)).toBe(true);

  // Test that new schema fields are accessible
  // Use parameterized queries to prevent SQL injection
  const userWithNewField = await db.query(
    'SELECT id, name, email, created_at FROM users WHERE id = ? LIMIT 1',
    [1]
  );
  expect(userWithNewField[0]).toHaveProperty('created_at');
});

Analyze Production Logs and Metrics

Use production data to identify what scenarios your tests should cover.

Data Sources:

- Error logs: What errors occur most frequently?

- Performance metrics: Where are the bottlenecks?

- User behavior analytics: What paths do users actually take?

- A/B test results: How do different implementations behave?

Review Test Coverage Quality

Go beyond line coverage to measure the quality of your test coverage.

Coverage Types:

- Line coverage: Which lines of code are executed

- Branch coverage: Which code paths are taken

- Function coverage: Which functions are called

How to Review Coverage Quality: Start with line coverage as a baseline, then focus on branch coverage and condition coverage (whether each boolean sub-expression has evaluated to both true and false) for critical paths. High coverage in these areas often correlates with better bug detection.

Metrics for Test Quality:

- Deployment Frequency: High frequency (daily/weekly) often indicates confidence in automated tests

- Change Failure Rate: Low failure rates in production indicate tests are catching real bugs

Practical Indicators:

- Deployment Confidence: Can you deploy without manual testing?

- Rollback Frequency: How often do you need to rollback due to bugs?

- Test Execution Time: Fast test suites enable quick feedback

- Flaky Test Rate: Low flaky test rates indicate reliable test suites

Building a Culture of Effective Testing

1. Make Testing Part of the Definition of Done

Every feature should include tests that would catch the most likely bugs for that feature.

2. Regular Test Quality Reviews

Schedule regular sessions to review test quality and identify gaps.

3. Learn from Production Incidents

After every production incident, ask: "Could our tests have caught this?"

4. Share Testing Knowledge

Create a testing playbook and share best practices across the team.

Conclusion

Writing tests that catch real bugs requires a shift in mindset from quantity to quality. It's not about achieving 100% coverage or writing the most tests possible. Instead, focus on understanding what can go wrong in your system and writing tests that specifically target those scenarios.

The most effective test suites are those that evolve based on real-world usage and actual bug reports. By starting with real problems, testing user journeys, and continuously improving based on production data, you can build a test suite that actually protects your users from bugs.

This approach naturally aligns with TDD and BDD methodologies, where the focus is on behavior-driven testing rather than implementation-focused testing. When you combine the strategies from this article with TDD/BDD practices, you create a powerful testing foundation that catches real bugs while maintaining code quality.

Remember, the goal isn't to eliminate all bugs - that's impossible. The goal is to catch the most impactful bugs before they reach production, and to have confidence that your tests will catch similar issues in the future.

Start by analyzing your recent bug reports, implementing the strategies outlined above, and measuring the impact on your test effectiveness. You'll be surprised how quickly you can transform your test suite from a coverage metric into a genuine quality assurance tool.

Related Articles

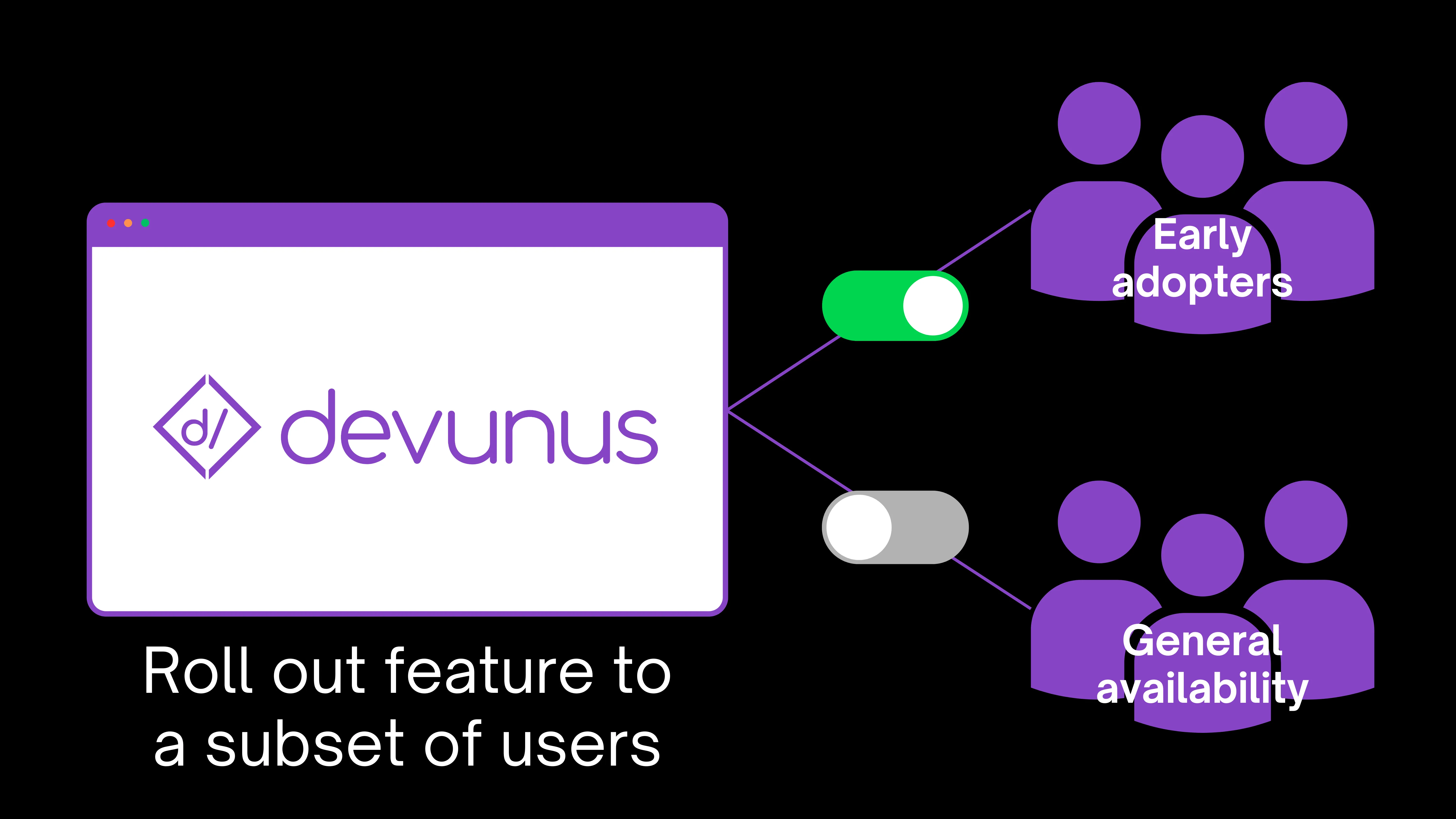

What Are the Benefits of Feature Flags? A Complete Guide for Developers

By integrating feature flags, teams can manage and roll out new features with unprecedented precision and control, enabling a more agile, responsive development process.

Common Technical Debt Issues and Strategies to Improve Them

A comprehensive guide to identifying, understanding, and resolving the most common technical debt patterns in software development